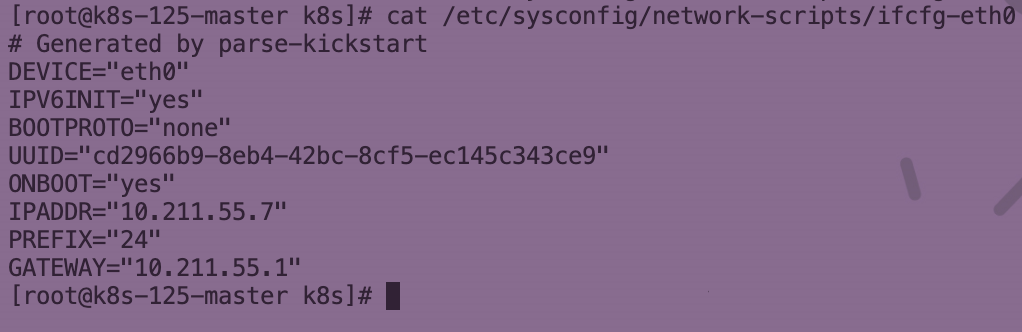

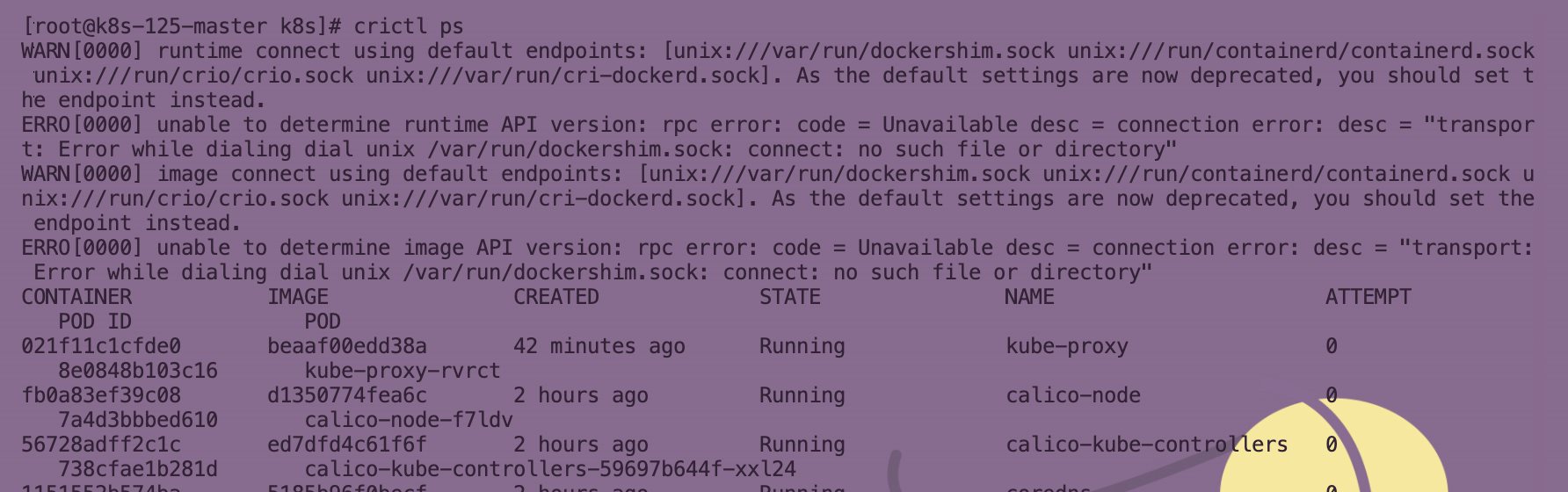

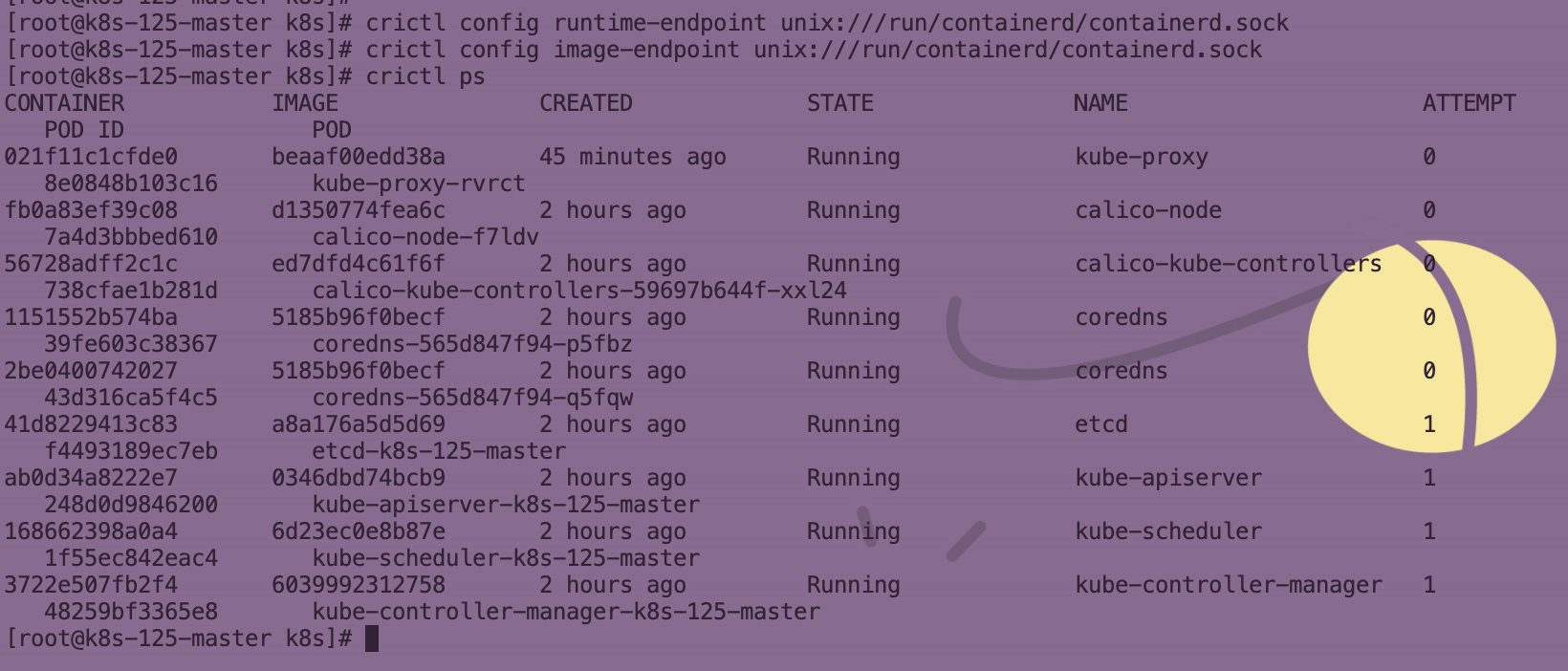

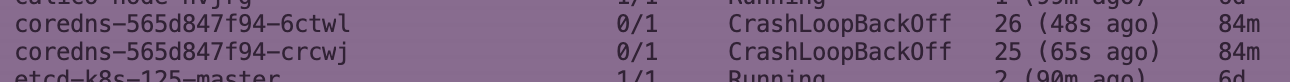

# Installing k8s 1.25.0 on CentOS 7

Published: 2022/10/27 15:06

[TOC]

## Installation packages

tn2>Link: https://pan.baidu.com/s/1H3M8fo3hh_qj4bJFifLRIQ Password: psha

## Installing containerd

```bash
yum install ntpdate -y
ntpdate ntp.aliyun.com
# Set your NIC to a static IP (mine is eth0; check your own interface name)
vim /etc/sysconfig/network-scripts/ifcfg-eth0
service network restart
```

tn2>Set the `BOOTPROTO` parameter to `none` and add your own IP and gateway addresses; the gateway depends on your virtual machine's network.
Be sure to configure DNS. I had not set it at first, and without it images cannot be pulled and CoreDNS fails to start.

```bash
# Generated by parse-kickstart
DEVICE="eth0"
IPV6INIT="yes"
BOOTPROTO="none"
UUID="cd2966b9-8eb4-42bc-8cf5-ec145c343ce9"
ONBOOT="yes"
IPADDR="10.211.55.7"
PREFIX="24"
GATEWAY="10.211.55.1"
DNS1=114.114.114.114
DNS2=8.8.8.8
```

```bash
ls
pwd
df -h
mkdir /k8s
cd /k8s/
# Put all of the downloaded packages into this directory
cp containerd.service /etc/systemd/system/containerd.service
ls
tar Cxzvf /usr/local containerd-1.6.9-linux-amd64.tar.gz
cat /etc/systemd/system/containerd.service
systemctl enable --now containerd
install -m 755 runc.amd64 /usr/local/sbin/runc
# Build and install libseccomp
tar zxvf libseccomp-2.5.4.tar.gz
cd libseccomp-2.5.4/
yum install gperf -y
# Optional signature check; substitute the actual signature and archive file names
gpg --verify file.asc file
./configure
make
make install
cd ..
chmod +x runc.amd64
install -m 755 runc.amd64 /usr/local/sbin/runc
runc -v
# Install the CNI plugins and the CNI test tools
mkdir -p /home/cni-plugins
tar Cxzvf /home/cni-plugins cni-plugins-linux-amd64-v1.1.1.tgz
mkdir -p /home/cni-tools
mkdir -p /etc/cni/net.d
cp 10-mynet.conf /etc/cni/net.d/10-mynet.conf
cp 99-loopback.conf /etc/cni/net.d/99-loopback.conf
tar Cxzvf /home/cni-tools cni-1.1.2.tar.gz
cd script/
chmod +x ./*.sh
wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
yum -y install jq
sudo CNI_PATH=/home/cni-plugins ./priv-net-run.sh echo "Hello World"
```

## Installing from the Aliyun mirror

```bash
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
setenforce 0
yum install -y --nogpgcheck kubelet-1.25.3-0 kubeadm-1.25.3-0 kubectl-1.25.3-0
systemctl enable kubelet && systemctl start kubelet
kubelet --version
# List the images that are required
kubeadm config images list
```

tn2>Configure each node according to its role.
```bash
# Run only the line that matches the node you are on
hostnamectl set-hostname k8s-125-master
hostnamectl set-hostname k8s-125-node1
hostnamectl set-hostname k8s-125-node2
```

```bash
# Disable the firewall and SELinux
systemctl stop firewalld
systemctl disable firewalld
setenforce 0                  # disable temporarily
vim /etc/sysconfig/selinux    # disable permanently
# Change to SELINUX=disabled

# Disable swap on all nodes
swapoff -a        # disable temporarily
vim /etc/fstab    # disable permanently
# Comment out the following entry
# /dev/mapper/cl-swap swap swap defaults 0 0

# Configure kernel parameters
cat > kubernetes.conf <<EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
vm.swappiness=0
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF
# Copy the tuning file into /etc/sysctl.d/ so that it is applied at boot
cp kubernetes.conf /etc/sysctl.d/kubernetes.conf
# Load the modules and apply the settings immediately
modprobe br_netfilter
modprobe ip_conntrack
sysctl -p /etc/sysctl.d/kubernetes.conf
```

```bash
# Prerequisites for running kube-proxy in IPVS mode (very important)
# Install the ipvsadm tool
yum install ipset ipvsadm -y
# nf_conntrack_ipv4 exists on the CentOS 7 kernel; on kernels 4.19 and later it was merged into nf_conntrack
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
# Make the script executable, run it, and confirm the modules are loaded
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
# Load the br_netfilter module (not loaded by default, so load it manually)
modprobe br_netfilter

# Import the offline images into containerd's k8s.io namespace
cd /k8s
ctr -n k8s.io images import kube-apiserver-1-25-3.tar
ctr -n k8s.io images import kube-controller-manager-1-25-3.tar
ctr -n k8s.io images import kube-scheduler-1-25-3.tar
ctr -n k8s.io images import kube-proxy-1-25-3.tar
ctr -n k8s.io images import pause-3-8.tar
ctr -n k8s.io images import pause-3-6.tar
ctr -n k8s.io images import etcd-3-5-4-0.tar
ctr -n k8s.io images import coredns-1-9-3.tar
ctr -n k8s.io images ls
crictl images
```

## master

```bash
kubeadm init --help

# Option 1: pass everything on the command line
kubeadm init --kubernetes-version=1.25.3 \
  --image-repository registry.aliyuncs.com/google_containers \
  --apiserver-advertise-address=10.211.55.7 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=192.168.0.0/16

# Option 2: use a config file; you only need to change the apiserver advertise address inside it
# Generate the file with: kubeadm config print init-defaults > kubeadm.yml
kubeadm init --config=kubeadm.yml --upload-certs | tee kubeadm-init.log

# Notes:
# - The value of kubernetes-version is the version reported by kubelet --version
# - apiserver-advertise-address must be the IP of the master machine
# - In mainland China you can set --image-repository registry.aliyuncs.com/google_containers

# If an error occurs, troubleshoot with the commands below
# (usually images cannot be pulled from outside China; pull from a domestic mirror instead)
systemctl status kubelet -l
systemctl status containerd -l

# List the images required to install k8s
kubeadm config images list

# After fixing the error, reset the cluster before running kubeadm init again
kubeadm reset

# Example of a successful run:
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

# This command carries the temporary token (expires after 24h) used to join worker nodes
kubeadm join 192.168.168.201:6443 --token pq5otc.ker47p9nails0xsf \
  --discovery-token-ca-cert-hash sha256:7a256694edafdbd21b52ca729b0b7ebc142c7fe8435657a6115b95019d2a3178
```

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# Alternative for the root user
export KUBECONFIG=/etc/kubernetes/admin.conf
# Verify
kubectl get pods --all-namespaces
```

## Installing the network plugin

```bash
# -L follows redirects in case the manifest URL has moved
curl -L https://docs.projectcalico.org/manifests/calico.yaml -O
# Change the pod network range in calico.yaml to the one passed to kubeadm init via --pod-network-cidr
# Here it is already the default, 192.168.0.0, so no change is needed
# sed -i 's/192.168.0.0/10.244.0.0/g' calico.yaml
kubectl apply -f calico.yaml
# Verify
kubectl get pods --all-namespaces
```

```bash
# Generate a new join token
kubeadm token create --print-join-command
# Run the printed command on the other nodes to join them to the cluster
kubeadm join 10.211.55.7:6443 --token dhuxe5.oiuoh0ux84dpfs7d --discovery-token-ca-cert-hash sha256:5592e3493fe04e46223023c9232601cb83f3c1d970dd71a3aac357d480087533
```

tn2>Enable IPVS

```bash
# Change mode to ipvs
kubectl edit -n kube-system cm kube-proxy
# mode: "ipvs"

# Delete the kube-proxy pods so that they are recreated with the new mode
kubectl get pod -n kube-system |grep kube-proxy |awk '{system("kubectl delete pod "$1" -n kube-system")}'

# Check the kube-proxy pods; the logs should show that IPVS is in use
kubectl get pod -n kube-system | grep kube-proxy
# Replace the pod name with one from the previous command
kubectl logs -n kube-system kube-proxy-4c5xj
# The IPVS rules can also be inspected directly
ipvsadm -Ln
```

tn2>Running `crictl` reports an error.

```bash
crictl ps
```

tn2>Cause: the endpoints are not configured.
Pointing them at containerd.sock fixes it.

```bash
crictl config runtime-endpoint unix:///run/containerd/containerd.sock
crictl config image-endpoint unix:///run/containerd/containerd.sock
```

tn2>After rebooting the host, kubelet on the master reports the error: unknown service runtime.v1alpha2.RuntimeService
This is most likely a problem with containerd's default configuration file, which usually lists `cri` under `disabled_plugins`. The configuration file is /etc/containerd/config.toml. Remove `cri` from that list manually, then restart containerd.
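Before editing `/etc/containerd/config.toml`, it may help to confirm that `cri` really is listed under `disabled_plugins`. The following is a minimal sketch and not part of the original steps: the function name `cri_disabled` is hypothetical, and it assumes `disabled_plugins` is written on a single line.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an illustration, not from the original post):
# succeeds (exit 0) if the given containerd config lists "cri" in a
# single-line disabled_plugins array, and fails otherwise.
cri_disabled() {
  local config="${1:-/etc/containerd/config.toml}"
  # A missing file cannot disable anything
  [ -f "$config" ] || return 1
  grep -Eq '^[[:space:]]*disabled_plugins[[:space:]]*=.*"cri"' "$config"
}
```

For example, `cri_disabled && echo "cri is disabled in config.toml"` could be run on the master before applying the fix.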
```bash
rm /etc/containerd/config.toml
systemctl restart containerd
vim /etc/containerd/config.toml
```

```toml
disabled_plugins = []

# Also recommended: use the systemd cgroup driver to avoid cgroup problems
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    SystemdCgroup = true

# Also recommended: set the pause image, because the default address is not reachable from mainland China
[plugins."io.containerd.grpc.v1.cri"]
  sandbox_image = "k8s.m.daocloud.io/pause:3.7"
```

```bash
systemctl daemon-reload
systemctl restart containerd
```

tn2>CoreDNS fails to start because `/etc/resolv.conf` is not configured.
It can be fixed as follows.

```bash
kubectl edit cm coredns -n kube-system
```

```yaml
apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health {
           lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        forward . 114.114.114.114 {
           max_concurrent 1000
           policy sequential
        }
        cache 30
        loop
        reload
        loadbalance
    }
kind: ConfigMap
metadata:
  creationTimestamp: "2022-10-27T05:37:37Z"
  name: coredns
  namespace: kube-system
  resourceVersion: "23720"
  uid: 21950342-42d9-4c0e-a610-ce1e5930a8a5
```

tn2>Then delete the CoreDNS pods; they are recreated and start successfully.
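Since the root cause described here is a missing resolver configuration, a quick check on each node can catch it early. A minimal sketch follows, not part of the original steps: the helper name `has_nameserver` is hypothetical, and it only tests that at least one uncommented `nameserver` line exists, not that the server is reachable.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an illustration, not from the original post):
# succeeds (exit 0) if the file contains at least one uncommented
# "nameserver <address>" line, and fails otherwise.
has_nameserver() {
  local file="${1:-/etc/resolv.conf}"
  # A missing file means no resolver is configured
  [ -f "$file" ] || return 1
  grep -Eq '^[[:space:]]*nameserver[[:space:]]+[^[:space:]]+' "$file"
}
```

For example, `has_nameserver /etc/resolv.conf || echo "no nameserver configured"` could be run on every node before initializing the cluster.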