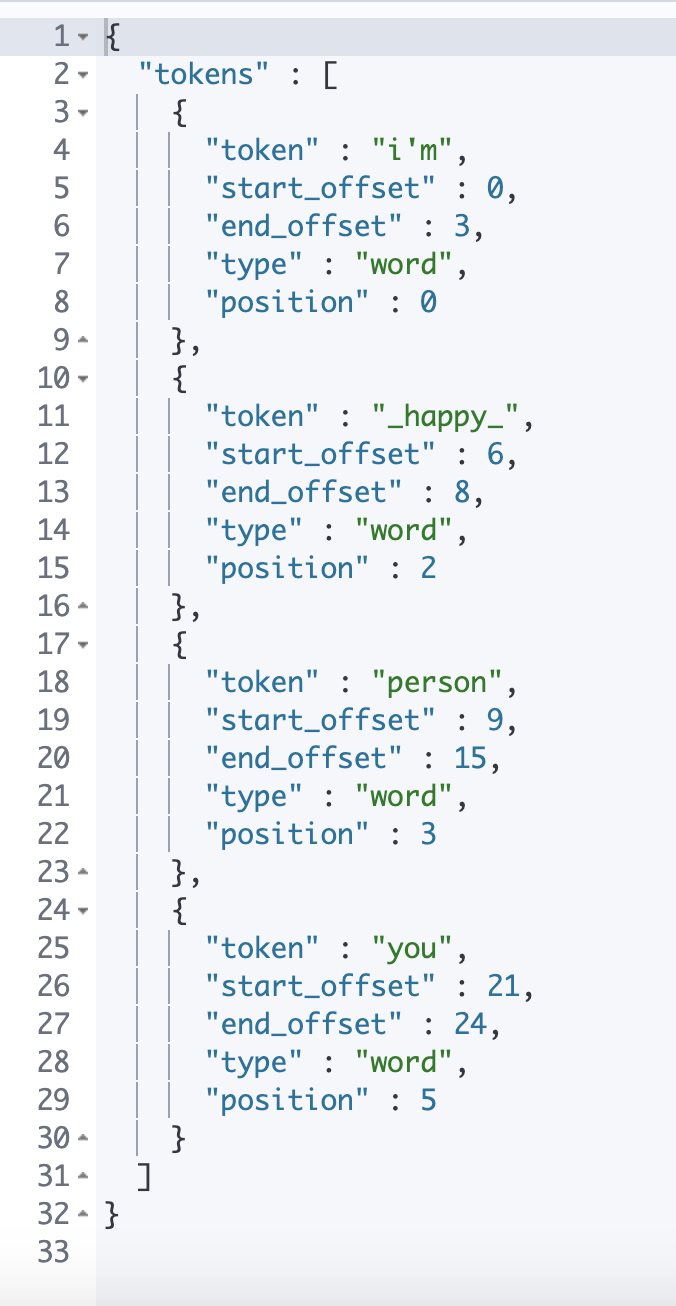

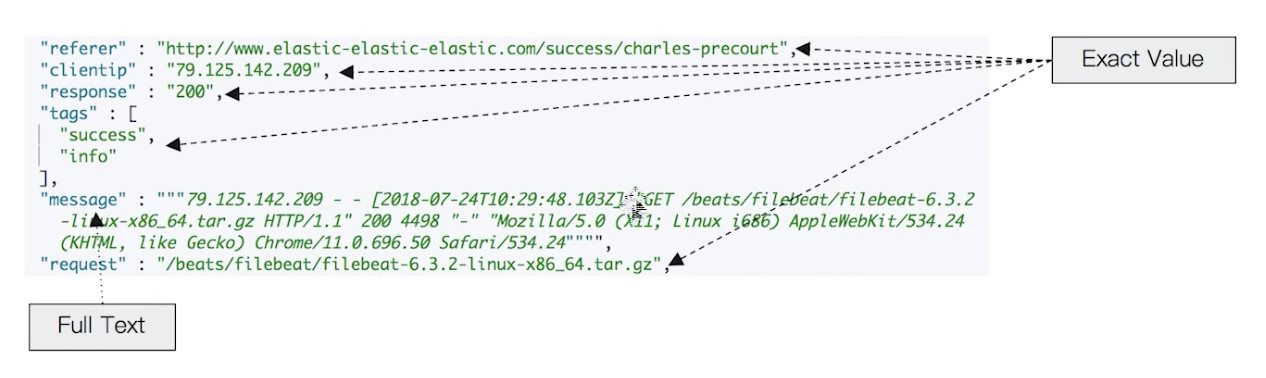

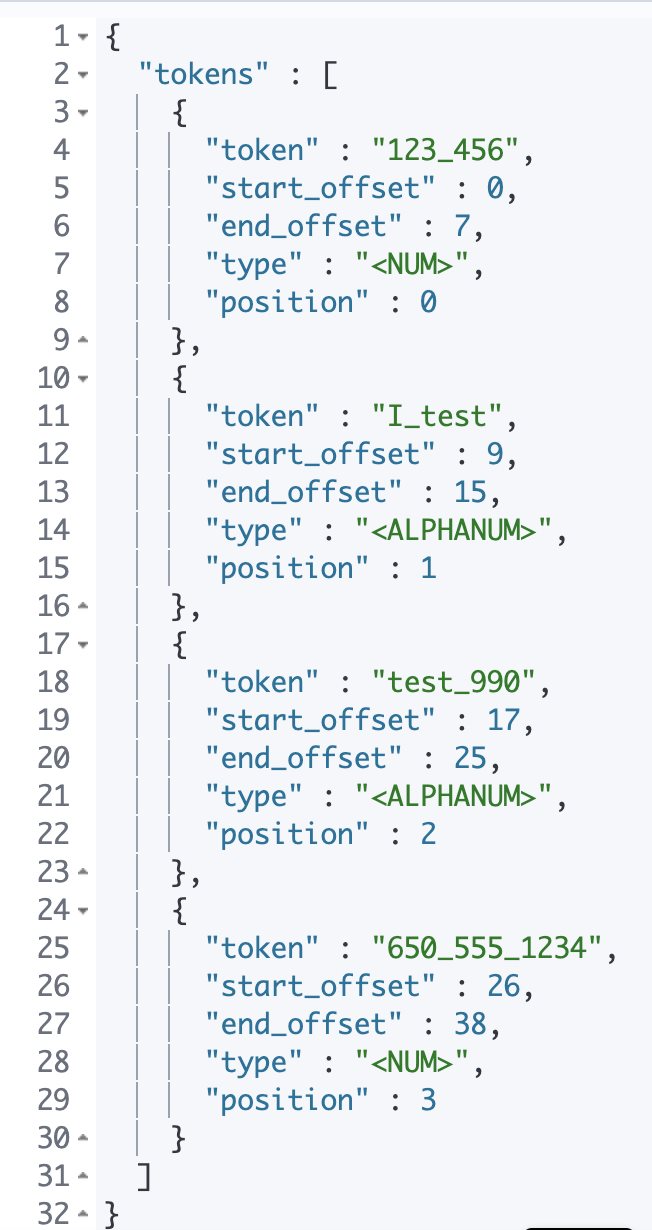

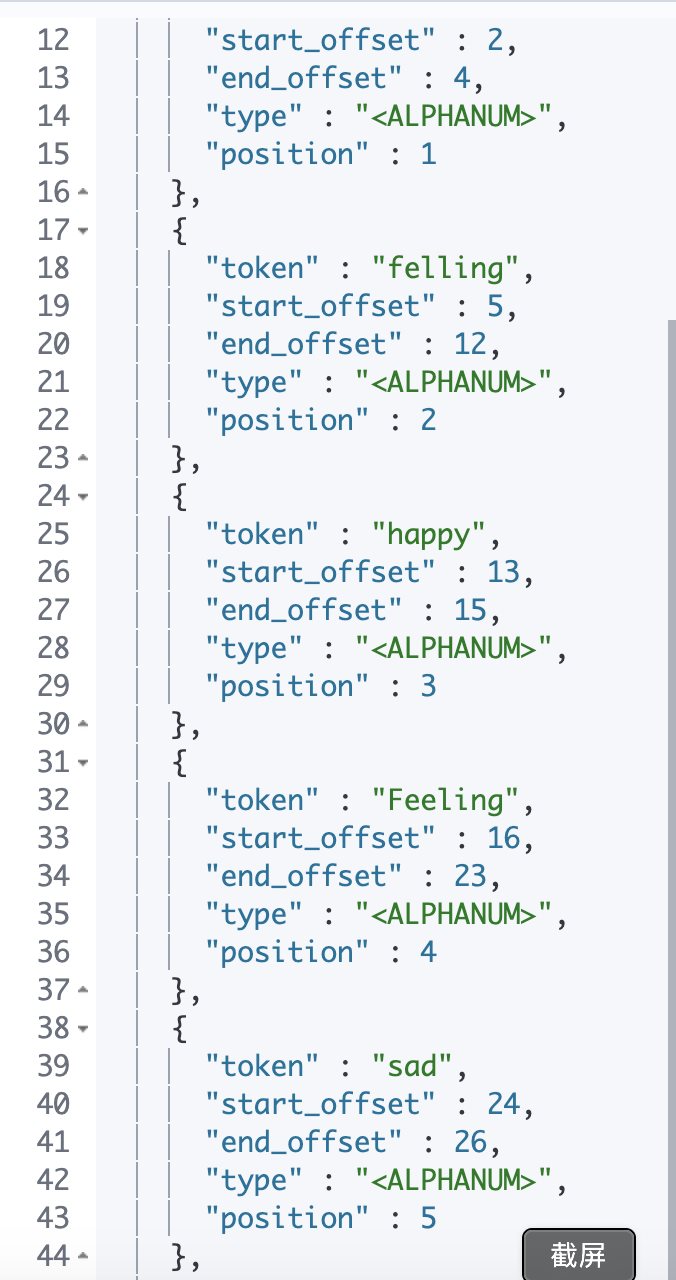

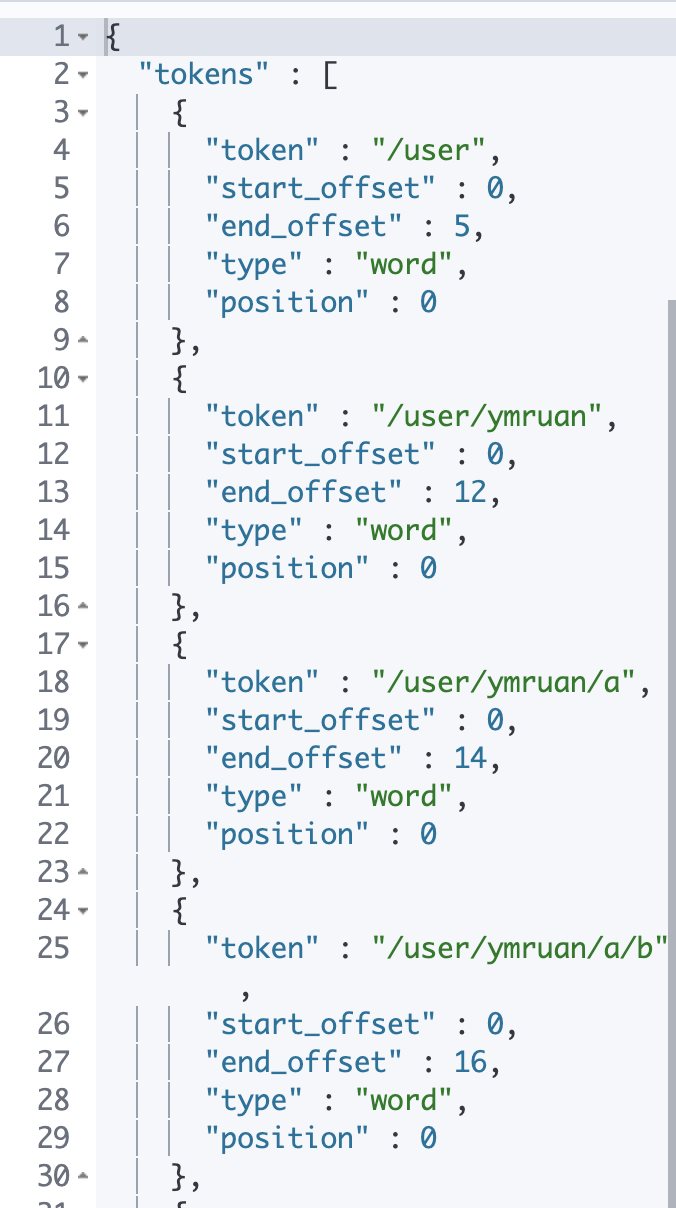

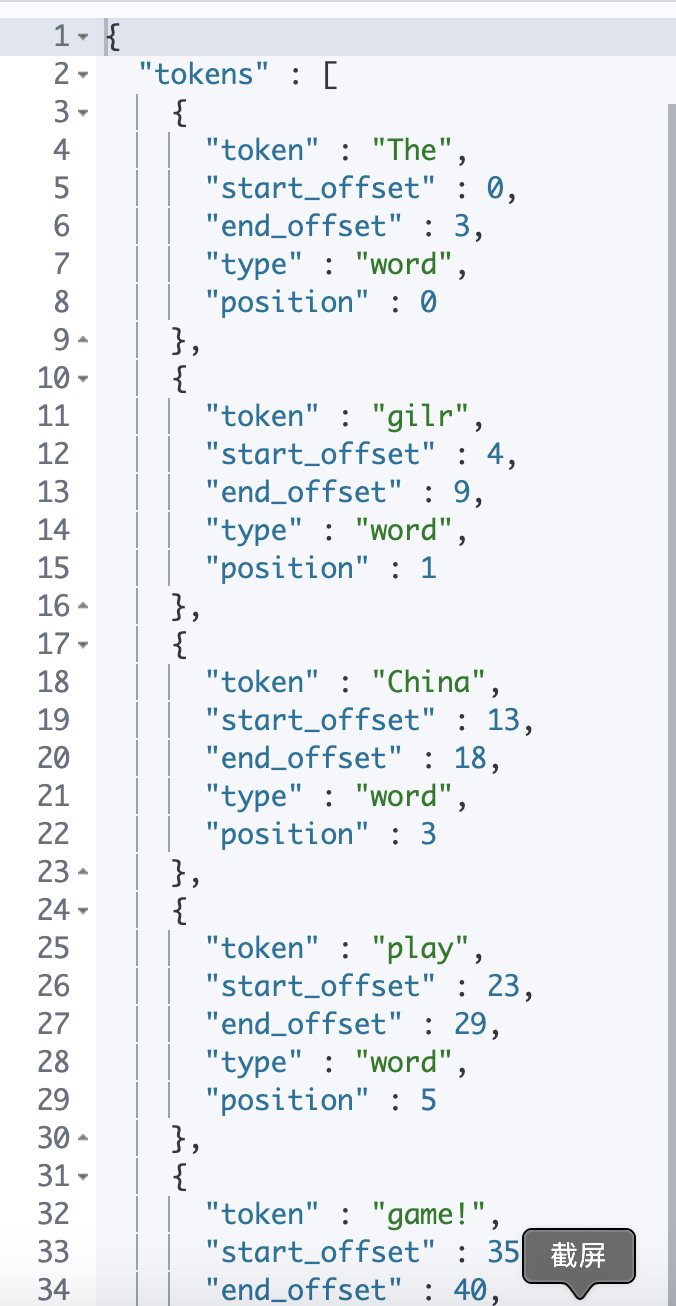

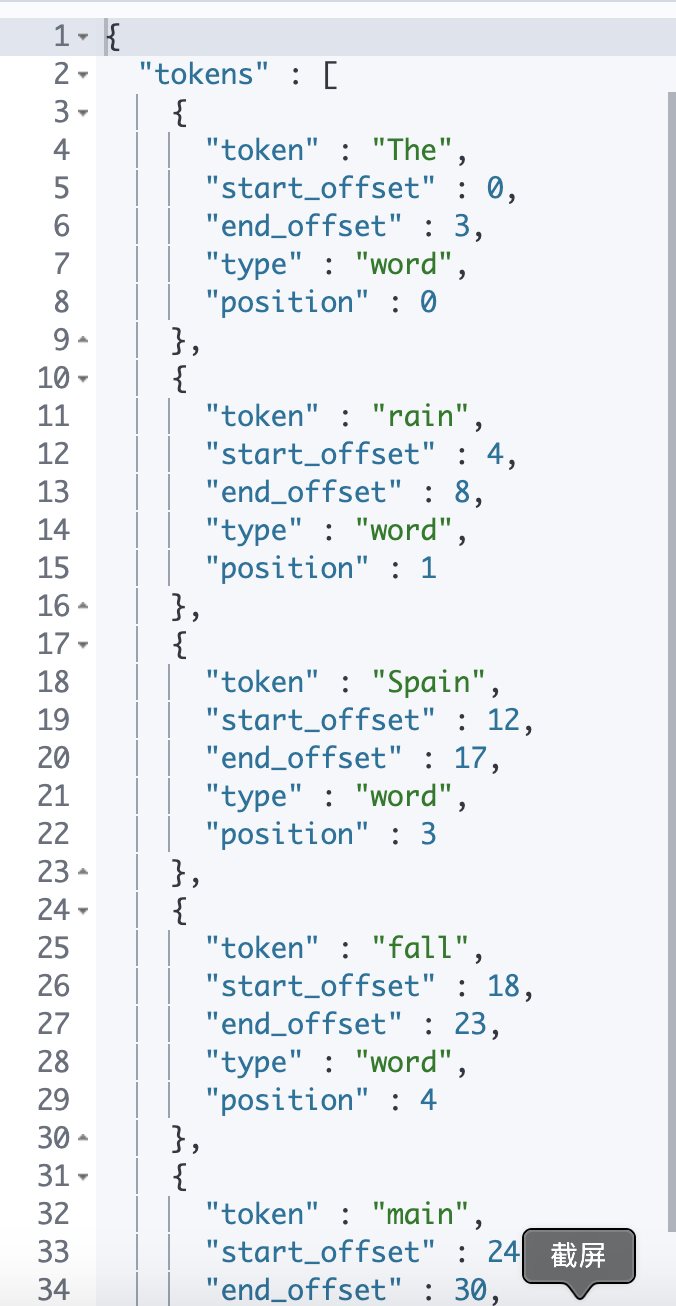

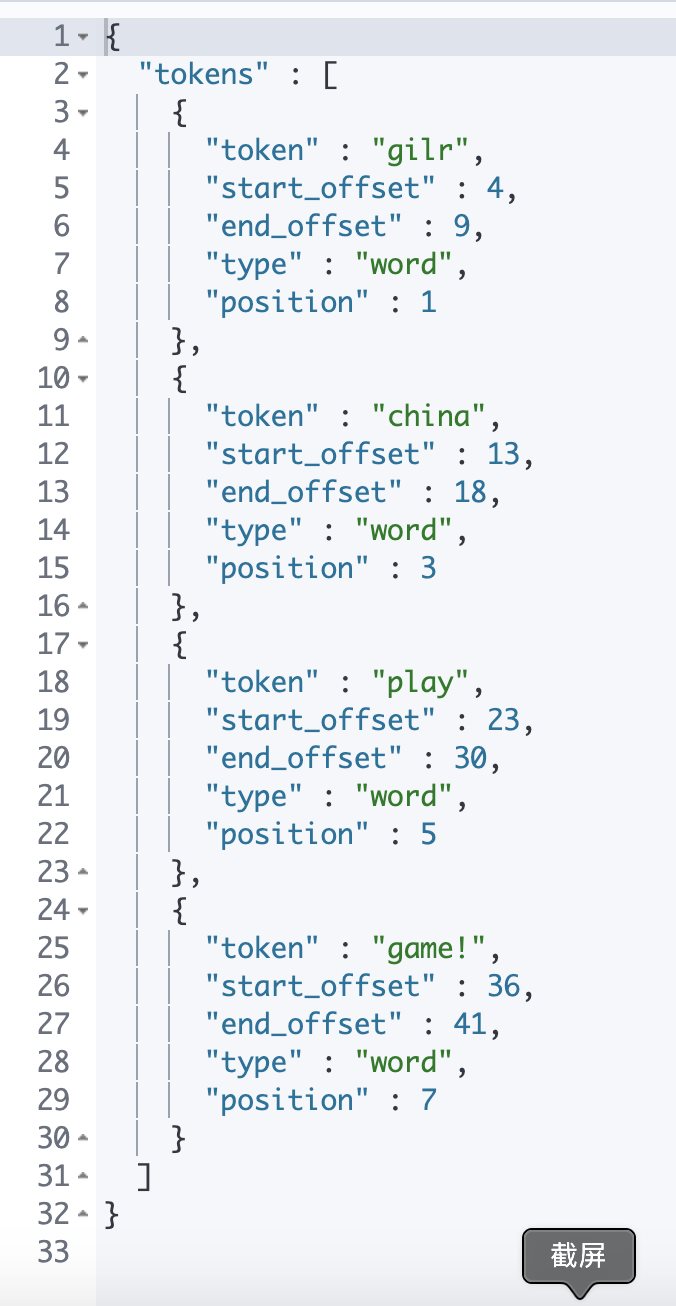

>#Elasticsearch Multi-Fields and Configuring a Custom Analyzer in the Mapping

Published: 2020/11/3 10:41

[TOC]

Multi-field types
------------

###Multi-field feature

tn>To match a manufacturer name exactly, add a `keyword` sub-field to the field.

###Using different analyzers

1. Search in different languages
2. Search on a pinyin sub-field
3. Specify different analyzers for indexing and for searching

tn>Adding sub-fields lets the same value be queried under different languages and grammatical rules.

Exact Values vs Full Text
------------

###Exact Values (keyword)

tn>Exact Value: numbers, dates, and specific strings. Typically used for values such as a product name (for example "Apple Store").

###Full Text (text)

tn>Full Text: unstructured text data.

Exact Values do not need to be tokenized
------------

tn>Elasticsearch builds an inverted index for every field. An Exact Value needs no special tokenization at index time.

For example:

Custom analyzers
------------

tn>When the analyzers that ship with Elasticsearch do not meet your needs, you can define your own by combining components of the following three kinds.

- Character Filter
- Tokenizer
- Token Filter

###Character Filters

>Character filters process the text before it reaches the Tokenizer, for example by adding, removing, or replacing characters. Several Character Filters can be configured at once. They affect the position and offset information that the Tokenizer reports.

Some of the built-in Character Filters:

- HTML strip: removes HTML tags

```bash
# The keyword tokenizer emits the whole input as a single token
# The html_strip char filter strips the HTML elements
POST _analyze
{
  "tokenizer": "keyword",
  "char_filter": ["html_strip"],
  "text": "<b>hello world</b>"
}
```

Result:

```bash
{
  "tokens" : [
    {
      "token" : "hello world",
      "start_offset" : 3,
      "end_offset" : 18,
      "type" : "word",
      "position" : 0
    }
  ]
}
```

- Mapping: string replacement

```bash
# Use a char filter to replace characters
# Here every - in the text is replaced with _
POST _analyze
{
  "tokenizer": "standard",
  "char_filter": [
    {
      "type" : "mapping",
      "mappings" : [ "- => _"]
    }
  ],
  "text": "123-456, I-test! test-990 650-555-1234"
}
```

Result:

- Emoticons can be replaced too. The request below turns `:>` into `happy` and `:<` into `sad`.

```bash
# Use a char filter to replace emoticons
POST _analyze
{
  "tokenizer": "standard",
  "char_filter": [
    {
      "type" : "mapping",
      "mappings" : [ ":> => happy", ":< => sad"]
    }
  ],
  "text": ["I am feeling :>", "Feeling :< today"]
}
```

Result:

- Pattern replace: regular-expression replacement (here the regex strips the leading http://)

```bash
# Regular expression
GET _analyze
{
  "tokenizer": "standard",
  "char_filter": [
    {
      "type" : "pattern_replace",
      "pattern" : "http://(.*)",
      "replacement" : "$1"
    }
  ],
  "text" : "http://www.elastic.co"
}
```

Result:

###Tokenizer

>- Splits the raw text into terms (tokens) according to a set of rules
>- Built-in Elasticsearch Tokenizers
>  - whitespace / standard / uax_url_email / pattern / keyword / path_hierarchy
>- You can write a plugin in Java to implement your own Tokenizer

###Token Filters

tn>The `whitespace` tokenizer splits text on whitespace only. Further processing of the resulting terms is done by Token Filters, several of which are built in.

>- Add, modify, or remove the terms that the Tokenizer outputs
>- Built-in Token Filters
>  - Lowercase / stop / synonym (adds synonyms)

| Name | Description |
| ------------ | ------------ |
| stop | Removes common stop words such as articles and prepositions; the list is lowercase, so apply it after `lowercase` |
| snowball | Reduces the different inflected forms of a word to its stem |
| lowercase | Converts terms to lowercase |

tn>The `path_hierarchy` tokenizer splits text along the segments of a path.

```bash
POST _analyze
{
  "tokenizer": "path_hierarchy",
  "text": "/user/ymruan/a/b/c/d/e"
}
```

Result:

```bash
# whitespace tokenizer with the stop and snowball filters
GET _analyze
{
  "tokenizer": "whitespace",
  "filter": ["stop","snowball"],
  "text": ["The girls in China are played this game!"]
}

# whitespace tokenizer with the stop and snowball filters, second sample
GET _analyze
{
  "tokenizer": "whitespace",
  "filter": ["stop","snowball"],
  "text": ["The rain in Spain falls mainly on the plain."]
}

# With lowercase applied first, "The" becomes "the" and is removed as a stop word
GET _analyze
{
  "tokenizer": "whitespace",
  "filter": ["lowercase","stop","snowball"],
  "text": ["The girls in China are playing this game!"]
}
```

Result (1):

Result (2):

Result (3):

Custom analyzers
------------

The general form of a custom analyzer definition is:

```bash
PUT /my_index
{
  "settings": {
    "analysis": {
      "char_filter": { ... custom character filters ... }, // character filters
      "tokenizer":   { ... custom tokenizers ... },        // tokenizers
      "filter":      { ... custom token filters ... },     // token filters
      "analyzer":    { ... custom analyzers ... }
    }
  }
}
```

```bash
# Note: the character class in the punctuation pattern starts with a space
PUT my_index
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_custom_analyzer" : {
          "type": "custom",
          "char_filter": [ "emoticons" ],
          "tokenizer": "punctuation",
          "filter": [ "lowercase", "english_stop" ]
        }
      },
      "tokenizer": {
        "punctuation": {
          "type" : "pattern",
          "pattern" : "[ .,!?]"
        }
      },
      "char_filter": {
        "emoticons": {
          "type" : "mapping",
          "mappings" : [ ":) => _happy_", ":( => _sad_"]
        }
      },
      "filter": {
        "english_stop" : {
          "type" : "stop",
          "stopwords" : "_english_"
        }
      }
    }
  }
}
```

>Try it out

```bash
POST my_index/_analyze
{
  "analyzer": "my_custom_analyzer",
  "text": "I'm a :) person, and you"
}
```

>Result
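
To make the order of the three stages in `my_custom_analyzer` easier to follow, here is a minimal pure-Python sketch that mimics the pipeline: char filter first, then tokenizer, then token filters. It is not how Elasticsearch implements analysis and it does not model positions or offsets. The function names are hypothetical, and `ENGLISH_STOP` is a small hand-picked subset standing in for the `_english_` stop-word list.

```python
import re

# Hypothetical stand-ins for the components configured in my_custom_analyzer.
EMOTICONS = {":)": "_happy_", ":(": "_sad_"}   # char_filter "emoticons"
PUNCTUATION = re.compile(r"[ .,!?]")            # tokenizer "punctuation"
# Assumption: a tiny subset of the _english_ stop words, for illustration only.
ENGLISH_STOP = {"a", "an", "and", "are", "in", "is", "of", "the", "to"}


def char_filter(text):
    """Stage 1: replace emoticons before any tokenization happens."""
    for src, dst in EMOTICONS.items():
        text = text.replace(src, dst)
    return text


def tokenize(text):
    """Stage 2: split on the punctuation pattern and drop empty pieces."""
    return [piece for piece in PUNCTUATION.split(text) if piece]


def token_filters(tokens):
    """Stage 3: lowercase first, then remove stop words, in configured order."""
    lowered = [token.lower() for token in tokens]
    return [token for token in lowered if token not in ENGLISH_STOP]


def analyze(text):
    """Run the three stages in the same order an analyzer applies them."""
    return token_filters(tokenize(char_filter(text)))


print(analyze("I'm a :) person, and you"))
# prints ["i'm", '_happy_', 'person', 'you']
```

Because the emoticon replacement runs before the tokenizer, `:)` survives as the single term `_happy_` instead of being discarded as punctuation, and because `lowercase` runs before the stop filter, capitalized stop words are removed as well.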